Batch Discrete-Time Estimation

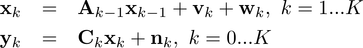

In this section we consider the following problem setting:

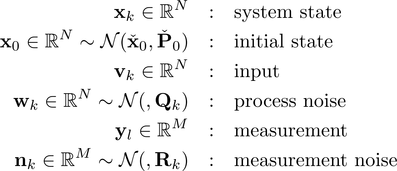

where k is the discrete time index and K its maximum value. The first equation represents the motion model, and the second the observation model. The variables have the following meaning:
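Since the equations above are given only as a figure, the following is a sketch of the two models and their variables. It assumes the linear-Gaussian setting and the notation of "State Estimation for Robotics"; the symbols $\mathbf{A}_{k-1}$, $\mathbf{C}_k$, $\mathbf{Q}_k$, $\mathbf{R}_k$ and $\check{\mathbf{P}}_0$ are taken from that book and are not defined elsewhere in this text:

$$
\begin{aligned}
\text{motion model:} \quad & \mathbf{x}_k = \mathbf{A}_{k-1}\mathbf{x}_{k-1} + \mathbf{v}_k + \mathbf{w}_k, && k = 1, \ldots, K \\
\text{observation model:} \quad & \mathbf{y}_k = \mathbf{C}_k\mathbf{x}_k + \mathbf{n}_k, && k = 0, \ldots, K
\end{aligned}
$$

- $\mathbf{x}_k$: system state

- $\mathbf{x}_0 \sim \mathcal{N}(\check{\mathbf{x}}_0, \check{\mathbf{P}}_0)$: initial state

- $\mathbf{v}_k$: input

- $\mathbf{w}_k \sim \mathcal{N}(\mathbf{0}, \mathbf{Q}_k)$: process noise

- $\mathbf{y}_k$: measurement

- $\mathbf{n}_k \sim \mathcal{N}(\mathbf{0}, \mathbf{R}_k)$: measurement noise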

These variables are all random variables, with the exception of the input, which is deterministic (and known, since it comes from our controller). Although our goal is to know the state of the system at all times, we only have access to the initial state knowledge, the inputs, and the measurements, and we must use this information to get the best possible estimate of the system state (if it is observable). Let us now define the batch state estimation problem as in the book "State Estimation for Robotics" by Timothy D. Barfoot:

The problem of state estimation is to come up with an estimate, $\hat{\mathbf{x}}_k$, of the true state of a system at one or more timesteps, $k$, given knowledge of the initial state, $\check{\mathbf{x}}_0$, a sequence of measurements, $\mathbf{y}_{0:K,\text{meas}}$, a sequence of inputs, $\mathbf{v}_{1:K}$, as well as knowledge of the system's motion and observation models.

We will start the introduction of batch estimation by formulating the batch linear-Gaussian (LG) estimation problem. There are two main approaches to solving this problem:

- Bayesian Inference: update a prior density over the states with our measurements in order to produce a posterior (Gaussian) density over states.

- Maximum A Posteriori (MAP): employ optimization to find the most likely posterior state given the information that is available (initial state, measurements, inputs).

Maximum A Posteriori

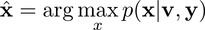

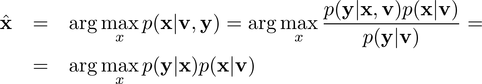

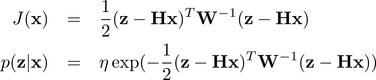

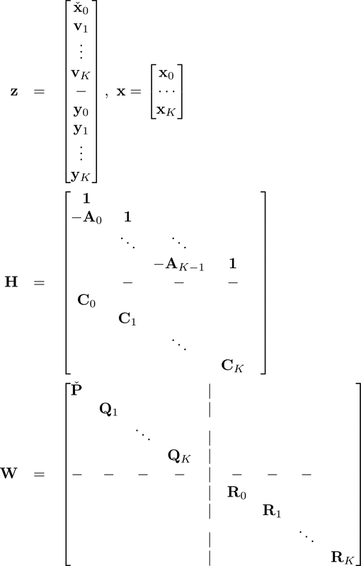

In batch estimation, the goal is to solve the following Maximum A Posteriori (MAP) problem:

where the variables correspond to the whole sequence from 0 to K. Rewriting the MAP estimate using Bayes' rule:
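The MAP problem and its Bayes' rule factorization can be sketched as follows, assuming the notation of the cited book, with $\mathbf{x} = \mathbf{x}_{0:K}$, $\mathbf{v} = (\check{\mathbf{x}}_0, \mathbf{v}_{1:K})$ and $\mathbf{y} = \mathbf{y}_{0:K}$:

$$
\hat{\mathbf{x}} = \arg\max_{\mathbf{x}} \, p(\mathbf{x} \mid \mathbf{v}, \mathbf{y})
= \arg\max_{\mathbf{x}} \, \frac{p(\mathbf{y} \mid \mathbf{x}, \mathbf{v}) \, p(\mathbf{x} \mid \mathbf{v})}{p(\mathbf{y} \mid \mathbf{v})}
= \arg\max_{\mathbf{x}} \, p(\mathbf{y} \mid \mathbf{x}) \, p(\mathbf{x} \mid \mathbf{v})
$$

The denominator does not depend on $\mathbf{x}$, so it can be dropped without changing the maximizer. The input $\mathbf{v}$ is dropped from $p(\mathbf{y} \mid \mathbf{x}, \mathbf{v})$ because, once the state is known, the observation model no longer depends on the input.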

Importantly, the noise variables are uncorrelated. Through a set of derivations described in detail in "State Estimation for Robotics" by Timothy D. Barfoot, the problem can be cast as the following minimum-energy problem:
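A compact way to write this objective, assuming the lifted notation of the cited book (the symbols $\mathbf{z}$, $\mathbf{H}$ and $\mathbf{W}$ are introduced here for illustration), is:

$$
J(\mathbf{x}) = \frac{1}{2} (\mathbf{z} - \mathbf{H}\mathbf{x})^T \mathbf{W}^{-1} (\mathbf{z} - \mathbf{H}\mathbf{x}),
\qquad
\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} J(\mathbf{x})
$$

Here $\mathbf{z}$ stacks the prior mean $\check{\mathbf{x}}_0$, the inputs $\mathbf{v}_{1:K}$ and the measurements $\mathbf{y}_{0:K}$, $\mathbf{H}$ is the matrix that maps the stacked state $\mathbf{x}$ to the predicted value of $\mathbf{z}$ through the motion and observation models, and $\mathbf{W}$ is the block-diagonal matrix of the prior, process-noise and measurement-noise covariances. The quadratic form arises from taking the negative logarithm of the Gaussian densities and discarding terms that do not depend on $\mathbf{x}$.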

Since $J(\mathbf{x})$ is a paraboloid, we can find its minimum in closed form by setting its partial derivative with respect to the design variable (the state) to zero. Essentially, the batch linear-Gaussian problem can be viewed as a mass-spring system: the optimal posterior solution is the one that corresponds to the minimum-energy state.
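To make the closed-form step concrete, here is a minimal NumPy sketch. It assumes the quadratic objective $J(\mathbf{x}) = \frac{1}{2}(\mathbf{z} - \mathbf{H}\mathbf{x})^T \mathbf{W}^{-1} (\mathbf{z} - \mathbf{H}\mathbf{x})$ in the lifted notation of the cited book, for which setting the derivative to zero gives the linear system $(\mathbf{H}^T \mathbf{W}^{-1} \mathbf{H}) \, \hat{\mathbf{x}} = \mathbf{H}^T \mathbf{W}^{-1} \mathbf{z}$. The function name `solve_batch_lg`, its arguments, and the time-invariant matrices `A`, `C`, `Q`, `R` are hypothetical choices made for illustration, not part of the original text.

```python
import numpy as np


def solve_batch_lg(A, C, Q, R, x0_check, P0_check, v, y):
    """Batch linear-Gaussian MAP estimate (illustrative sketch).

    Assumed models (time-invariant for simplicity):
        x_k = A x_{k-1} + v_k + w_k,  w_k ~ N(0, Q),  k = 1..K
        y_k = C x_k + n_k,            n_k ~ N(0, R),  k = 0..K
    v has shape (K, N) and holds v_1..v_K.
    y has shape (K+1, M) and holds y_0..y_K.
    """
    N = A.shape[0]          # state dimension
    M = C.shape[0]          # measurement dimension
    n_states = v.shape[0] + 1

    rows = n_states * (N + M)
    H = np.zeros((rows, n_states * N))
    W_inv = np.zeros((rows, rows))
    z = np.zeros(rows)

    # Prior on the initial state: x_0 should be close to x0_check.
    H[:N, :N] = np.eye(N)
    W_inv[:N, :N] = np.linalg.inv(P0_check)
    z[:N] = x0_check

    # Motion model rows: x_k - A x_{k-1} should be close to v_k.
    Q_inv = np.linalg.inv(Q)
    for k in range(1, n_states):
        r = slice(k * N, (k + 1) * N)
        H[r, (k - 1) * N:k * N] = -A
        H[r, k * N:(k + 1) * N] = np.eye(N)
        W_inv[r, r] = Q_inv
        z[r] = v[k - 1]

    # Observation model rows: C x_k should be close to y_k.
    R_inv = np.linalg.inv(R)
    offset = n_states * N
    for k in range(n_states):
        r = slice(offset + k * M, offset + (k + 1) * M)
        H[r, k * N:(k + 1) * N] = C
        W_inv[r, r] = R_inv
        z[r] = y[k]

    # Zero gradient of J(x): (H^T W^-1 H) x = H^T W^-1 z.
    lhs = H.T @ W_inv @ H
    rhs = H.T @ W_inv @ z
    x_hat = np.linalg.solve(lhs, rhs)
    return x_hat.reshape(n_states, N), H, W_inv, z


if __name__ == "__main__":
    # Constant-velocity toy problem with a noisy position measurement.
    rng = np.random.default_rng(0)
    A = np.array([[1.0, 1.0], [0.0, 1.0]])
    C = np.array([[1.0, 0.0]])
    Q = 0.01 * np.eye(2)
    R = np.array([[0.1]])
    x0_check = np.array([0.0, 1.0])
    P0_check = 0.5 * np.eye(2)
    v = np.zeros((5, 2))
    y = np.arange(6.0).reshape(-1, 1) + 0.3 * rng.standard_normal((6, 1))
    x_hat, _, _, _ = solve_batch_lg(A, C, Q, R, x0_check, P0_check, v, y)
    print(x_hat)
```

In the mass-spring picture, each row block of `H` is one spring and the corresponding block of `W_inv` is its stiffness. If the inputs and measurements are noise-free and consistent with the prior mean, every residual is zero and the estimate reproduces the generating trajectory. The dense matrices keep the sketch short; a practical implementation would likely exploit the block-tridiagonal structure of $\mathbf{H}^T \mathbf{W}^{-1} \mathbf{H}$.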

References and further readings:

- Timothy D. Barfoot, "State Estimation for Robotics", Cambridge University Press, 2017

Note: This part of the online textbook is not finalized yet!