Assigned Student Projects

Fall 2019

Project 1: Micro Aerial Vehicle Landing Pad for Unmanned Ground Vehicles

Description: This project aims to develop a landing pad for Micro Aerial Vehicles (MAVs) that are ferried by, and integrated onboard, Unmanned Ground Vehicles (UGVs). The envisioned landing pad should allow the MAV to land securely on it, contain a mechanism that allows the MAV to be released, and integrate supporting electronics that assist the landing process. Indicative examples include an up-facing camera system capable of tracking the relative position of the MAV and communicating this information to the robot, appropriate patterns to be detected by the sensors onboard the flying vehicle, or other methods. Two-way communication between the landing pad and the MAV is also required.

Download detailed project description.
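One way to read the "up-facing camera" option is as a pinhole back-projection problem. Once the MAV is detected at pixel (u, v), its position relative to the pad camera follows from the camera intrinsics and an estimate of its range. The sketch below is a minimal illustration under stated assumptions. It assumes a calibrated, undistorted camera and estimates range from the MAV's apparent width, given its known physical width. The intrinsic values, the detection, and all function names are hypothetical and not part of the project specification.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics of the up-facing pad camera, in pixels (hypothetical values)."""
    fx: float
    fy: float
    cx: float
    cy: float


def range_from_apparent_width(width_px: float, true_width_m: float, fx: float) -> float:
    """Range along the optical axis from the MAV's apparent width in the image,
    assuming its true width is known and it is roughly parallel to the image plane."""
    return fx * true_width_m / width_px


def pixel_to_camera(u: float, v: float, z: float, K: Intrinsics) -> tuple:
    """Back-project pixel (u, v) at depth z into (x, y, z) in the camera frame.
    Lens distortion is ignored (assumes an already rectified image)."""
    x = (u - K.cx) * z / K.fx
    y = (v - K.cy) * z / K.fy
    return (x, y, z)


if __name__ == "__main__":
    K = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # Hypothetical detection: MAV centred at pixel (380, 210), 60 px wide, 0.15 m real width.
    z = range_from_apparent_width(60.0, 0.15, K.fx)
    print(pixel_to_camera(380.0, 210.0, z, K))  # approximately (0.15, -0.075, 1.5)
```

For the camera-to-robot link, the resulting (x, y, z) would still need to be transformed from the camera frame into whatever frame the MAV controller uses. The size-based range estimate could also be replaced by a barometer or rangefinder reading.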

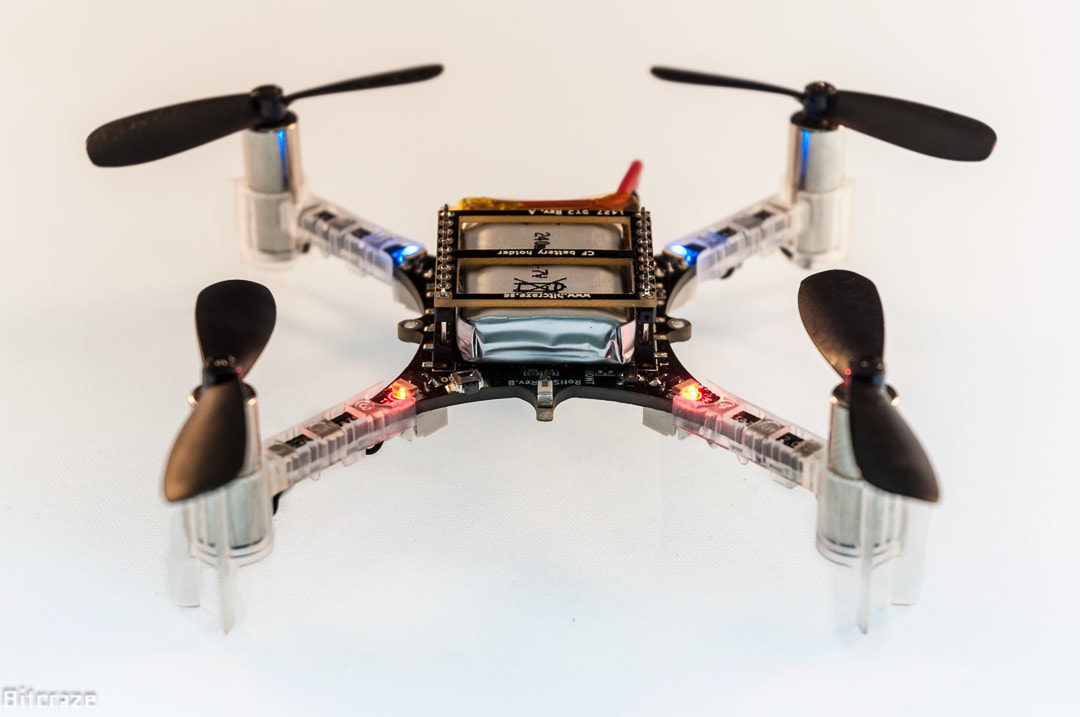

Project 2: Micro Sensing for Ultra Micro Drones

Description: In this project, the goal is to investigate alternative sensing methods for ultra micro drones (under 200 g including airframe and battery) that enable them to navigate with as much autonomy as possible. Indicative sensing options include a) extremely small cameras and micro processing, b) extremely small thermal cameras and micro processing, c) tactile sensors, and d) combinations of the above, among others. The goal is to design a single integrated "micro-drone" sensing solution that can then be considered for a variety of systems.

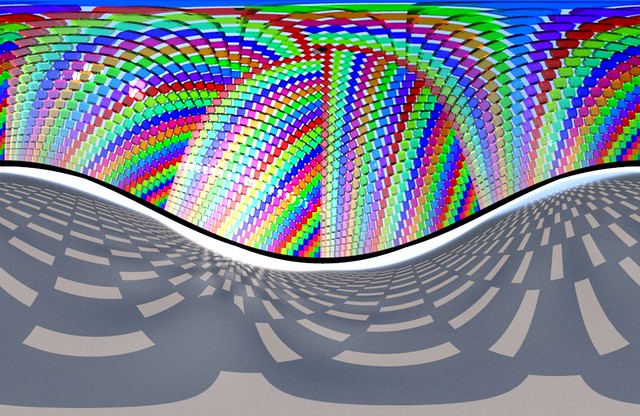

Project 3: Messing with Visual-Inertial Navigation

Description: Aerial robotic systems rely heavily on visual-inertial navigation for autonomous operation in GPS-denied environments. This project asks the question: what should we integrate into our rooms and facilities to confuse the onboard perception of such robots and cause the pose estimation process to fail? In other words, which visual patches, lights, and other (ideally subtle) components could a facility integrate to render it inaccessible to flying vehicles that navigate autonomously using vision?
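One plausible line of attack, offered here only as a hypothesis to test, targets feature matching. Many descriptor-based visual front ends reject ambiguous correspondences with Lowe's ratio test. A tiled or repetitive pattern produces near-identical descriptors, so matches fail the test and the estimator is starved of features. The toy sketch below uses made-up 2-D vectors in place of real ORB or SIFT descriptors, and the function names are illustrative. Pipelines that track features with KLT optical flow instead of descriptor matching may fail in different ways.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def ratio_test_matches(query, train, ratio=0.8):
    """Match each query descriptor to its nearest train descriptor, keeping the match
    only if it passes Lowe's ratio test (best distance < ratio * second-best distance)."""
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((euclidean(q, t), ti) for ti, t in enumerate(train))
        if len(ranked) < 2:
            continue  # the ratio test needs at least two candidates
        (d1, best), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


if __name__ == "__main__":
    # Distinctive scene: well-separated toy descriptors.
    distinct = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    # Repetitive scene: a tiled pattern yields identical descriptors.
    tiled = [(1.0, 1.0)] * 4
    print(len(ratio_test_matches([(0.5, 0.0), (10.0, 0.5)], distinct)))  # 2
    print(len(ratio_test_matches([(1.2, 1.0)], tiled)))  # 0
```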

Spring 2018

Project 1: Localization in smoke-filled environments through ultrasound sensing

- Task 1: Literature review

- Task 2: Proposition of Hardware System and Research Direction

- Task 3: Hardware prototype implementation

- Task 4: Localization algorithm development

- Task 5: Method and system verification

- Task 6: Extensive evaluation and report

Initial literature elements:

- Drumheller, Michael. "Mobile robot localization using sonar." IEEE Transactions on Pattern Analysis and Machine Intelligence 9, no. 2 (1987): 325-332.

- Brown, Russell G., and Bruce Randall Donald. "Mobile robot self-localization without explicit landmarks." Algorithmica 26, no. 3-4 (2000): 515-559.

- Varveropoulos, Vassilis. "Robot localization and map construction using sonar data." The Rossum Project 10 (2005): 1-10.

Download camera data.
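The project leaves the localization method open. The cited literature matches sonar scans against a map, but a simpler building block is shown below. It converts ultrasonic time of flight to range, then trilaterates a 2-D position from ranges to fixed ultrasonic beacons. The beacon infrastructure, the one-way time-of-flight setup, and all names here are hypothetical assumptions. The speed-of-sound expression is the common linear approximation for dry air, c ≈ 331.3 + 0.606·T m/s with T in °C. It is included because air temperature in a smoke-filled space may be far from room temperature, which biases ranges computed with a fixed speed of sound.

```python
import math


def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s), linear in temperature (deg C)."""
    return 331.3 + 0.606 * temp_c


def tof_to_range(tof_s: float, temp_c: float = 20.0, round_trip: bool = False) -> float:
    """Convert an ultrasonic time of flight into a distance.
    Use round_trip=True for pulse-echo sonar (sound travels out and back)."""
    d = speed_of_sound(temp_c) * tof_s
    return d / 2.0 if round_trip else d


def trilaterate_2d(beacons, ranges):
    """Least-squares 2-D position from ranges to >= 3 non-collinear beacons at known
    positions, linearised by subtracting the first range equation from the others."""
    (x0, y0), r0 = beacons[0], ranges[0]
    a11 = a12 = a22 = b1 = b2 = 0.0  # accumulate normal equations (A^T A) p = A^T b
    for (xi, yi), ri in zip(beacons[1:], ranges[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        b = r0**2 - ri**2 + xi**2 + yi**2 - x0**2 - y0**2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * b
        b2 += ay * b
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("need at least 3 non-collinear beacons")
    # Solve the 2x2 system with Cramer's rule.
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


if __name__ == "__main__":
    beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]  # hypothetical beacon layout (m)
    true_pos = (1.0, 1.0)
    ranges = [math.dist(true_pos, b) for b in beacons]
    print(trilaterate_2d(beacons, ranges))  # close to (1.0, 1.0)
```

With noisy ranges, more than three beacons let the least-squares solve average out errors. A filter (for example, a particle filter) would be the natural next step for the "localization algorithm development" task.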

Project 2: Target/object-tracking through computer vision

Description: The ability to detect an object (or target) of interest and track its motion is fundamental to many aerial robotic applications. In this project, the goal is to investigate the potential of computer vision methods that use a single camera, mounted on a moving platform (the aerial robot), to track a moving target. The required outcome is an accurate estimate of the centroid of a user-defined object in the camera frame while the object itself is moving. Furthermore, the algorithm should handle short-term occlusions, predict the object's trajectory, and re-detect the object once it reappears in the camera frame.
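If the object has been segmented into a binary mask, its centroid follows from image moments: u = m10 / m00 and v = m01 / m00. The sketch below computes these moments in plain Python to show the arithmetic. The segmentation step that produces the mask is assumed and not shown. In OpenCV, `cv2.moments` returns the same `m00`, `m10`, and `m01` values. Returning `None` for an empty mask is a design choice that lets a downstream tracker treat the frame as occluded.

```python
def mask_centroid(mask):
    """Centroid (u, v) of the nonzero pixels of a binary mask (list of rows),
    from the zeroth and first image moments: u = m10 / m00, v = m01 / m00.
    Returns None if the mask is empty, e.g. when the object is occluded."""
    m00 = m10 = m01 = 0
    for v, row in enumerate(mask):
        for u, val in enumerate(row):
            if val:
                m00 += 1
                m10 += u
                m01 += v
    if m00 == 0:
        return None
    return (m10 / m00, m01 / m00)


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 0, 0, 0],
    ]
    print(mask_centroid(mask))  # (2.5, 1.5)
```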

- Task 1: Literature review

- Task 2: Proposition of Hardware System and Research Direction

- Task 3: Camera integration in ROS and development of a test case (tracked object, etc.)

- Task 4: Object tracking algorithm development

- Task 5: Method verification

- Task 6: Extensive evaluation and report

Initial literature elements:

- Baker, Simon, Ralph Gross, and Iain Matthews. "Lucas-Kanade 20 years on: a unifying framework." (2003).

- Tomasi, Carlo, and Takeo Kanade. "Detection and tracking of point features." (1991).

- Yilmaz, Alper, Omar Javed, and Mubarak Shah. "Object tracking: A survey." ACM Computing Surveys (CSUR) 38, no. 4 (2006): 13.

Download the data.
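For the occlusion and trajectory-prediction requirement of Project 2, a common baseline is a constant-velocity Kalman filter on the measured centroid. The filter coasts on its prediction while the object is hidden and resumes fusing measurements when the object is re-detected. The sketch below is one such baseline, not a prescribed method. It assumes the two image axes are independent (so each gets its own 2-state filter), pixel units, one frame per time step, and a white-noise-acceleration process model. The tuning values `q` and `r` are hypothetical. Gating re-detections against the prediction is left out for brevity.

```python
class AxisKF:
    """Constant-velocity Kalman filter for one image axis; state = [position, velocity]."""

    def __init__(self, pos, q=1.0, r=4.0):
        self.x = [pos, 0.0]
        self.P = [[r, 0.0], [0.0, 100.0]]  # large initial velocity uncertainty
        self.q = q  # process noise (acceleration) intensity
        self.r = r  # measurement noise variance (px^2)

    def predict(self, dt):
        p, v = self.x
        self.x = [p + v * dt, v]
        P, q = self.P, self.q
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and white-noise-acceleration Q.
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        self.P = [[p00 + q * dt**4 / 4, p01 + q * dt**3 / 2],
                  [p10 + q * dt**3 / 2, p11 + q * dt**2]]

    def update(self, z):
        P = self.P
        s = P[0][0] + self.r                 # innovation variance, H = [1, 0]
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        y = z - self.x[0]                    # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


class CentroidTracker:
    """Tracks an object centroid (u, v) in pixels with one AxisKF per axis."""

    def __init__(self, u, v, q=1.0, r=4.0):
        self.kx = AxisKF(u, q, r)
        self.ky = AxisKF(v, q, r)

    def step(self, measurement, dt=1.0):
        """Predict one frame ahead; fuse the centroid if visible, else coast."""
        self.kx.predict(dt)
        self.ky.predict(dt)
        if measurement is not None:
            self.kx.update(measurement[0])
            self.ky.update(measurement[1])
        return (self.kx.x[0], self.ky.x[0])


if __name__ == "__main__":
    trk = CentroidTracker(100.0, 50.0)
    for k in range(1, 21):  # object moves at (10, 5) px/frame and stays visible
        trk.step((100.0 + 10.0 * k, 50.0 + 5.0 * k))
    for k in range(21, 24):  # three occluded frames: prediction only
        est = trk.step(None)
    print(est)  # close to the true position (330, 165)
```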

Project 3: Human detection through IR Vision

Description: In many search and rescue applications, the task is to identify where humans are and to register them on a map. In this process, human detection is particularly important. Currently, deep learning algorithms achieve excellent performance in detecting humans in visible-light camera frames when the human's posture is similar to that in the training data (e.g., a standing pedestrian). However, accuracy drops sharply when the posture is different (e.g., lying down) or the person is within a very cluttered scene (e.g., an earthquake victim within the remains of a collapsed building). A natural alternative is to employ thermal vision. In this project, the goal is to develop efficient computer vision algorithms that enable human detection from lightweight, low-resolution IR cameras.

- Task 1: Literature review

- Task 2: Understanding IR camera data

- Task 3: Human Detection on IR frames algorithm development

- Task 4: Method verification

- Task 5: Extensive evaluation and report
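A simple non-learning baseline for low-resolution IR frames, useful as a reference point during evaluation, is to threshold on a body-temperature band and keep connected warm regions above a minimum size. The sketch below assumes a radiometric frame already expressed in °C as a list of rows. The 30 to 40 °C band and the 3-pixel minimum are hypothetical choices. Real surface temperatures depend on clothing, emissivity, distance, and camera calibration, so these values would need tuning on the project data.

```python
from collections import deque


def detect_warm_blobs(frame, t_min=30.0, t_max=40.0, min_pixels=3):
    """Return bounding boxes (row0, col0, row1, col1) of 4-connected regions whose
    temperature (deg C) lies in [t_min, t_max] and that contain >= min_pixels pixels."""
    rows, cols = len(frame), len(frame[0])

    def in_band(r, c):
        return t_min <= frame[r][c] <= t_max

    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or not in_band(r, c):
                continue
            # Breadth-first flood fill of one connected warm region.
            seen[r][c] = True
            queue = deque([(r, c)])
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx] and in_band(ny, nx):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:  # drop isolated noisy pixels
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes


if __name__ == "__main__":
    frame = [[20.0] * 8 for _ in range(8)]  # 8x8 frame, 20 deg C background
    for r in (2, 3):
        for c in (1, 2, 3):
            frame[r][c] = 34.0  # a warm, person-like region
    frame[6][6] = 60.0  # hot object outside the body band
    frame[7][0] = 33.0  # single noisy pixel
    print(detect_warm_blobs(frame))  # [(2, 1, 3, 3)]
```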

Presentation of your Project:

Each team should give its progress presentation in class on April 11. Please use this template and prepare about 10 slides for your project. Select one or more of your team members to give the presentation.

Spring 2016

Team 1: Development of an automated Fixed-Wing UAV [Download presentation]

Team 2: Development of a Multirotor Aerial Vehicle capable of Autonomous Navigation [Download presentation]

Team 3: Monocular, hardware-synchronized, Camera-IMU Localization [Download presentation]

Team 4: Monocular, software-synchronized, Camera-IMU Localization [Download presentation]

Team 5: Aerial Robot Swarming subject to Communication Constraints [Download presentation]

Team 6: Hardware-in-the-Loop Fixed-Wing Control [Download presentation]

Team 7: Large baseline Stereo Camera Depth Sensing [Download presentation]

Team 8: Agile Multirotor Flight and Control through Smart Devices [Download presentation]

Team 9: Simulation-based Control of Multirotor Aerial Vehicles [Download presentation]