ARL: SubT-Edu

SubT-Edu is a collection of resources from the Autonomous Robots Lab that aims to help students perform research in subterranean robotics. These resources should be used in conjunction with the extensive resources released as part of the DARPA Subterranean Challenge Virtual Circuits; information about those is available at https://subtchallenge.com/ and https://github.com/osrf/subt. Below we explicitly reference some of the resources released by the SubTChallenge community and also provide links to other resources released by Team CERBERUS. The current theme of SubT-Edu is path planning; resources relating to reinforcement learning in subterranean environments will be released soon.

Research Resources

Open-Source Packages for Exploration Path Planning in Subterranean Environments

For those who want to quickly test the planners mentioned above, together with all of their dependencies (volumetric mapping and more), we have created a virtual machine using VirtualBox. To test using the virtual machine:

Coming next: subt-rl-edu, resources for reinforcement learning to navigate in subterranean settings.

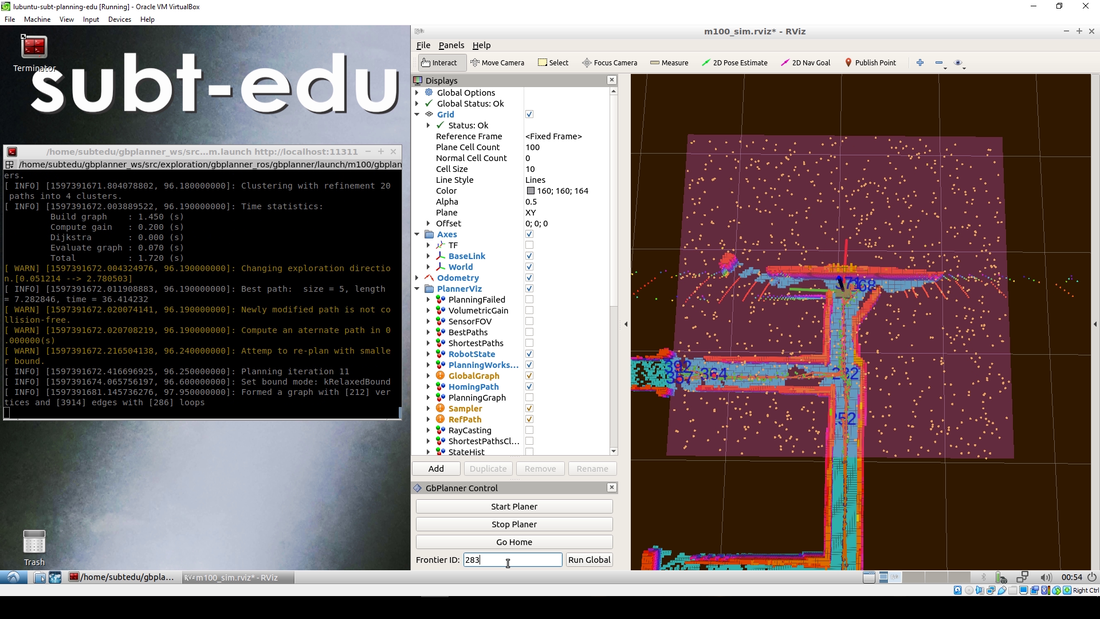

Example of using the provided virtual machine to test our exploration path planners.

Relevant talk by Tung Dang at the PX4DevSummit in summer 2020.

Quick Installation and Test Instructions

GBPlanner Workspace Setup (Melodic)

Indicative Results in Simulation

This is an indicative result of using gbplanner in simulation. It is far from complete: the short video only shows local exploration and frontier re-positioning.

MBPlanner Workspace Setup (Melodic)

This is an indicative result of using mbplanner in simulation. It is far from complete: the short video only shows local exploration and homing.

Work using results and developments of Team CERBERUS

Team CERBERUS participates in the DARPA Subterranean Challenge. CERBERUS deploys a combination of walking and flying robots to autonomously explore and search underground environments with efficiency and resilience. Our team emphasizes the performance of our system-of-systems solution and, at the same time, the rapid advancement of the science in this domain. For the latter, we believe that long-term benefits come from open-sourcing a significant subset of the methods we develop. Visit the repositories of our partners, such as:

Work using any SubTChallenge Robot

You can benefit from the community as a whole and freely select any of the robot models released for the DARPA Subterranean Challenge, available at: https://subtchallenge.world/openrobotics/fuel/collections/SubT%20Tech%20Repo

Among those, you can pick some of the released CERBERUS robots, namely:

The DARPA Subterranean Challenge has released multiple, diverse robot models to choose from.

Utilize Data to Enable your Research and Training

Below we provide data from field experiments to enable research in the domain of subterranean robotics.

Thermal-Inertial Underground Dataset

This dataset contains thermal images from either a FLIR Tau2 or a FLIR Boson (16-bit format), VN100 IMU measurements and, in some cases, point clouds from a Velodyne PUCK Lite LiDAR or an Ouster OS1-64. The datasets were collected during autonomous flight experiments with our robot, using an onboard computer running Ubuntu 16.04 and ROS Kinetic, at a) the Comstock underground mine in Northern Nevada, b) an active gold mine in Northern Nevada, c) an urban parking lot, and d) our Autonomous Robots Arena. All datasets contain both the sensor data and the intrinsic and extrinsic sensor calibrations (YAML files).

Downloads
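
Because the thermal frames are stored as 16-bit images, they often look nearly uniform when displayed directly. The sketch below shows one common way to rescale a frame to 8 bits for viewing. It assumes a frame has already been extracted from the recorded data as a 2D `uint16` NumPy array; the function name `thermal16_to_8bit` and the percentile defaults are hypothetical choices. The rescaling discards absolute intensity information, so it is meant for visualization only, not for processing that relies on the raw values.

```python
import numpy as np


def thermal16_to_8bit(frame, low_pct=1.0, high_pct=99.0):
    """Rescale a 16-bit thermal frame to 8 bits for visualization.

    Hypothetical helper. Percentile clipping keeps a few very hot or very
    cold pixels from compressing the contrast of the rest of the image.
    """
    frame = np.asarray(frame, dtype=np.float64)
    lo, hi = np.percentile(frame, [low_pct, high_pct])
    if hi <= lo:
        # Flat image: there is no range to stretch, return mid-grey.
        return np.full(frame.shape, 128, dtype=np.uint8)
    # Clip to the percentile range, then map [lo, hi] linearly onto [0, 255].
    scaled = (np.clip(frame, lo, hi) - lo) / (hi - lo)
    return np.round(scaled * 255.0).astype(np.uint8)
```

Percentile-based clipping is used here instead of the plain minimum and maximum because a single hot spot (for example a motor or a person) would otherwise squeeze the rest of the scene into a few grey levels.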

An indicative result from this dataset is presented below.

Graph-based Exploration Path Planning

This dataset contains data from the autonomous exploration of underground mines in the U.S. and Switzerland using both flying and legged robots. The data were collected during autonomous operations with the Graph-based Exploration Path Planner (GBPlanner) and can therefore be used to verify the planner's operation or to reproducibly compare our method against alternative state-of-the-art strategies or new research.

Downloads

An indicative result from this dataset is presented below.

Learning-based Exploration Training Dataset

This dataset contains the training and inference data needed to replicate and verify our work on using imitation learning to deploy a learned policy capable of autonomously exploring underground mines without access to a consistent, online-built 3D map. Instead, the method relies only on a sliding window of observations.

Downloads
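
To illustrate what relying on a sliding window of observations means in practice, here is a minimal sketch of a fixed-length observation buffer whose stacked contents could be fed to a learned policy at each step. The class `ObservationWindow`, the window length and the observation layout are hypothetical; this is not the implementation or input format of our published method.

```python
from collections import deque

import numpy as np


class ObservationWindow:
    """Fixed-length buffer holding only the most recent observations.

    Hypothetical helper: window length and observation layout are
    illustrative assumptions.
    """

    def __init__(self, length):
        self.length = length
        # deque with maxlen silently discards the oldest entry when full.
        self._buffer = deque(maxlen=length)

    def push(self, observation):
        self._buffer.append(np.asarray(observation, dtype=np.float32))

    def is_full(self):
        return len(self._buffer) == self.length

    def as_input(self):
        """Stack the window (oldest first) into one array for the policy."""
        if not self.is_full():
            raise ValueError("window not yet filled")
        return np.stack(list(self._buffer), axis=0)
```

Nothing older than the window is kept, which is the key contrast with map-based planners: memory use stays constant no matter how long the robot explores.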

An indicative result from this dataset is presented below.

Localization and Mapping Underground

A major challenge for subterranean autonomy is resilient Simultaneous Localization And Mapping (SLAM). Underground environments can be particularly challenging, with degradation such as a) geometric self-similarity, b) darkness and a lack of visual features, and c) dense obscurants such as dust and smoke. A major part of our activities is devoted to exactly this problem. Although our lab has not yet released an open-source software package for these methods, we would like to highlight that we emphasize multi-modal sensor fusion. We publish extensively in that direction, and many relevant papers can be found in the Publications section of our website. The following two papers are the most illustrative examples of the methods running onboard our legged and flying robots in Team CERBERUS to solve this problem:

- S. Khattak, D. H. Nguyen, F. Mascarich, T. Dang, and K. Alexis, "Complementary Multi–Modal Sensor Fusion for Resilient Robot Pose Estimation in Subterranean Environments", International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 2020

- S. Khattak, C. Papachristos, and K. Alexis, "Keyframe-based Thermal-Inertial Odometry", Journal of Field Robotics, 2019, pp. 1–28, https://doi.org/10.1002/rob.21932

Related open-source packages:

- ROVIO (Robust Visual Inertial Odometry): https://github.com/ethz-asl/rovio

- OKVIS (Open Keyframe-based Visual-Inertial SLAM): https://github.com/ethz-asl/okvis_ros

- libpointmatcher (a modular library implementing the Iterative Closest Point (ICP) algorithm): https://github.com/ethz-asl/libpointmatcher
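
For readers new to ICP, the following is a minimal textbook sketch of point-to-point ICP in NumPy: it alternates brute-force nearest-neighbour matching with the closed-form SVD (Kabsch) solution for the best rigid transform. It is not libpointmatcher's API; libpointmatcher adds configurable data filters, outlier rejection, kd-tree matching and other error metrics such as point-to-plane. The function names are hypothetical, and brute-force matching is only practical for small clouds.

```python
import numpy as np


def best_fit_transform(src, dst):
    """Least-squares rigid transform (R, t) mapping src onto dst (Kabsch/SVD)."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:
        # Reflection case: flip the last singular vector to get a rotation.
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, iterations=50, tol=1e-10):
    """Point-to-point ICP; returns (R, t) such that dst ~ src @ R.T + t."""
    dim = src.shape[1]
    R_total = np.eye(dim)
    t_total = np.zeros(dim)
    current = np.array(src, dtype=np.float64)
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force squared distances from every current point to every dst point.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        R, t = best_fit_transform(current, dst[idx])
        current = current @ R.T + t
        # Compose the increment with the accumulated transform.
        R_total = R @ R_total
        t_total = R @ t_total + t
        # RMS distance of this iteration's matches (measured before the update).
        err = np.sqrt(d2[np.arange(len(current)), idx].mean())
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

Like any ICP variant, this only converges to the right answer when the initial misalignment is small relative to the point spacing; otherwise the nearest-neighbour matches are wrong and it can settle in a local minimum, which is one reason odometry priors matter in self-similar tunnels.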

Seeing better Underground

Whatever camera you select, unless you opt for extreme hardware, you are very likely to face significant challenges perceiving far away in a subterranean setting, at least as far as visible-light sensors are concerned. This can happen even when spending considerable power on onboard illumination: the environments can be very dark, there may be no surface close by, or you may need to see further than the nearest surface. Pay attention to the true raw image information your camera provides. We highly advise you to take a few of your frames, open any image processing tool and start experimenting with filters. You could implement something as simple as histogram equalization, the slightly more applicable CLAHE (Contrast Limited Adaptive Histogram Equalization), or try our tool for low-light image enhancement. Here are some useful links:

- Image Brighten ROS package: https://github.com/unr-arl/image_brighten_ros

- OpenCV CLAHE documentation: https://docs.opencv.org/3.4/d6/db6/classcv_1_1CLAHE.html
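
The idea behind histogram equalization can be sketched in a few lines of NumPy, assuming an 8-bit grayscale frame: build the intensity histogram, take its cumulative distribution and use it as a lookup table that spreads the occupied intensities over the full 0 to 255 range. The function name is hypothetical. CLAHE applies the same idea to small image tiles and clips each tile's histogram before equalizing, which limits noise amplification in nearly uniform regions; in OpenCV's Python bindings it is available through `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` followed by `.apply(image)`.

```python
import numpy as np


def equalize_histogram(img):
    """Global histogram equalization of an 8-bit grayscale image."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # pixel count at the darkest intensity present
    total = img.size
    if total == cdf_min:
        # Single-intensity image: nothing to redistribute.
        return img.copy()
    # Stretch the CDF so the darkest present level maps to 0 and the
    # brightest to 255; levels below the darkest are clipped to 0.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]
```

On a dark frame whose pixels only occupy a handful of low intensity levels, this maps those levels to evenly spaced values across the whole 8-bit range, which is why faint structure far down a tunnel can become visible; it also amplifies sensor noise, which is the motivation for CLAHE's clip limit.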

Watch a Selected Talk or check a result from our group

We have selected some of our works that we believe can help you build on top of our methods, and we provide links to the relevant talks and results.

The associated paper details can be found in the Publications section of our website.

Stay Tuned and Get Involved

As our activities progress, we plan to support the subterranean robotics community, and more broadly the effort toward resilient autonomy in high-risk settings, with more resources. We seek to collaborate with research teams and students who share common interests, especially if:

- You are trying to apply any of our work to your problem

- You are interested in using CERBERUS robots or CERBERUS-based software to participate in the DARPA Subterranean Challenge Virtual Circuits

- You are interested in perception-degraded localization and mapping

- You are interested in single- or multi-robot exploration

- You have ideas about novel robots for subterranean and other high-risk environments