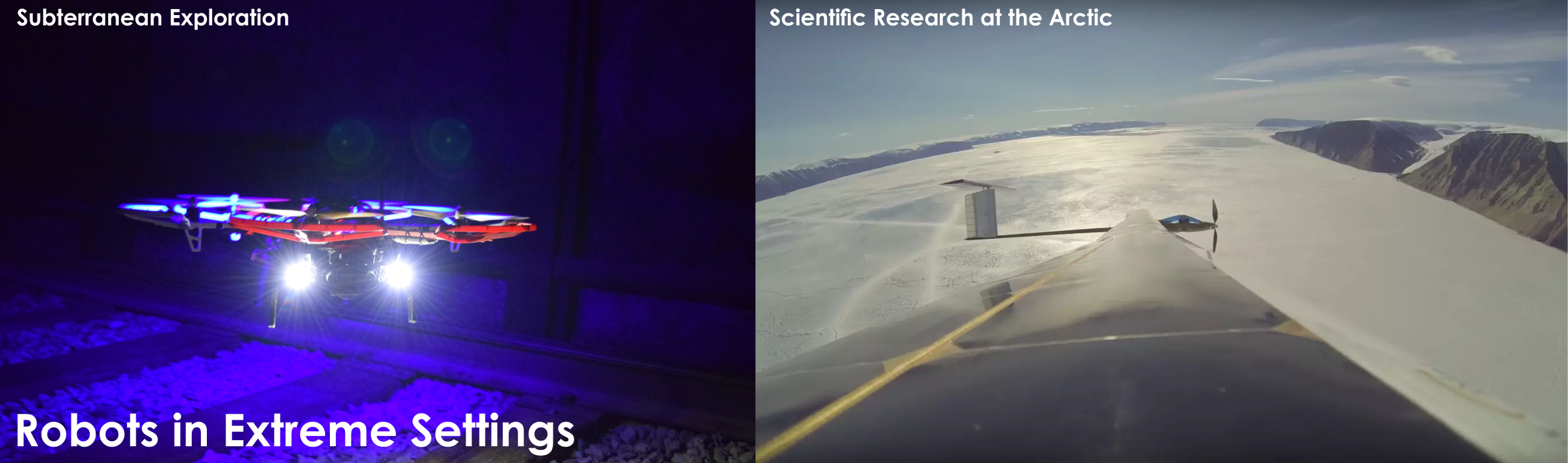

Tutorial Outline:

- Overview of indicative scenarios of extreme aerial robot navigation

- Multi-modal SLAM and autonomous exploration in underground environments

- Agile single- and multi-robot navigation in cluttered settings

- Multi-hour autonomous flight and environmental monitoring over the Arctic region

- Remaining challenges, future research directions and opportunities

Tutorial Summary: Progress in autonomous aerial robots has enabled their wide use in a variety of important applications such as infrastructure monitoring and precision agriculture. However, their truly ubiquitous use, and their deployment in the most challenging environments and critical use cases, depends on their ability to navigate in extreme conditions. In this tutorial we consider two main examples, namely a) subterranean navigation for rotorcraft aerial robots (operating individually or as teams), and b) long-endurance fixed-wing flight over the Arctic. In that context, this tutorial overviews the advances in robotic perception, state estimation, planning, control and vehicle design that are required for autonomous systems to seamlessly navigate, explore and map within such challenging environments.

From a technological standpoint, the focus is on three major challenges: a) degraded sensing, b) austere navigation, and c) long-term autonomy and endurance. In terms of degraded sensing, the emphasis is on environments in which exteroceptive or proprioceptive sensing (or both) may provide weak and ill-conditioned information. Characteristic examples include dark tunnels and caves, dust- and smoke-filled mines, and long-term autonomous flight above the Arctic, where magnetometer readings are weak and GNSS satellite geometry is poor. By austere navigation, we particularly emphasize complex underground environments such as mines with narrow ore passes, tunnels and more. Finally, in terms of long-term autonomy, we refer to systems that, on one hand, possess extended endurance and, on the other hand, have robust state estimation, control and planning that allow them to operate reliably for extended periods of time.
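To make "poor GNSS satellite geometry" and "ill-conditioned information" concrete, the following minimal sketch computes standard dilution-of-precision (DOP) metrics from the linearized pseudorange geometry matrix. The two constellations are hypothetical, hand-picked (azimuth, elevation) sets rather than real ephemerides: one includes a satellite near zenith, while the other keeps every satellite close to the horizon, loosely mimicking the tendency of GNSS satellites to appear low in the sky at high latitudes. The function names and constellation values are illustrative assumptions and are not part of the tutorial material.

```python
import numpy as np

def los_unit_vector(azimuth_deg, elevation_deg):
    """East-North-Up unit vector pointing from the receiver toward a satellite."""
    az = np.radians(azimuth_deg)
    el = np.radians(elevation_deg)
    return np.array([np.cos(el) * np.sin(az),   # East
                     np.cos(el) * np.cos(az),   # North
                     np.sin(el)])               # Up

def dilution_of_precision(satellites):
    """Return (GDOP, VDOP, cond(H^T H)) for (azimuth, elevation) pairs in degrees."""
    # Linearized pseudorange model: each row is [-e_E, -e_N, -e_U, 1],
    # where the last column accounts for the unknown receiver clock bias.
    H = np.array([np.append(-los_unit_vector(az, el), 1.0) for az, el in satellites])
    normal = H.T @ H
    # Cofactor matrix, assuming equal, unit-variance, uncorrelated ranging errors.
    Q = np.linalg.inv(normal)
    gdop = np.sqrt(np.trace(Q))
    vdop = np.sqrt(Q[2, 2])  # index 2 is the Up component
    return gdop, vdop, np.linalg.cond(normal)

# Hypothetical constellations given as (azimuth, elevation) in degrees.
GOOD_GEOMETRY = [(0, 90), (0, 30), (90, 30), (180, 30), (270, 30)]
LOW_ELEVATION_GEOMETRY = [(0, 5), (72, 15), (144, 10), (216, 5), (288, 15)]

for name, sats in [("good", GOOD_GEOMETRY), ("low-elevation", LOW_ELEVATION_GEOMETRY)]:
    gdop, vdop, cond = dilution_of_precision(sats)
    print(f"{name:>14}: GDOP={gdop:6.2f}  VDOP={vdop:6.2f}  cond(H^T H)={cond:8.1f}")
```

For the good set, the geometry works out analytically to GDOP = sqrt(25/3) ≈ 2.89 and VDOP = sqrt(5) ≈ 2.24. With all satellites near the horizon, the Up column of the geometry matrix becomes nearly proportional to the clock-bias column, so height and receiver clock error are hard to separate; this appears as a noticeably larger VDOP and condition number.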

The tutorial builds its presentation and discussion on the research experience of its organizers, which includes: a) extensive autonomous exploration and mapping missions with aerial robots inside visually-degraded (dark, hazy) mines and tunnels, b) agile navigation of micro aerial robots within cluttered environments, and c) multi-hour solar-powered UAV flight in the Arctic region for environmental research. Additional experience in the following areas is also considered: a) multi-modal Simultaneous Localization and Mapping through camera, LiDAR and IR vision fusion, b) belief- and saliency-aware autonomous exploration, c) agile flight control, d) multi-robot teaming with simultaneous ultra-wideband (UWB)-based localization, e) specialized aerial robot design for aggressive flight, f) long-endurance solar-powered unmanned aircraft design, and g) robust state estimation over the Arctic zone. Beyond presenting current and previous results, the tutorial contributes to organizing and defining the core research directions that can allow flying robots to achieve advanced levels of robustness, resiliency and multi-agent reconfigurability when operating in the most extreme conditions and environments, such as those found in subterranean settings.

Intended Audience:

- Graduate students in computer science, electrical engineering, mechanical and aerospace engineering.

- Researchers in robotics, computer vision, machine learning and flight control systems.

- Industry representatives in the fields of mining, search and rescue, surveillance and environmental monitoring.

Organizers:

- Kostas Alexis, Autonomous Robots Lab, University of Nevada, Reno

- Mark Mueller, HiPeRLab, University of California, Berkeley

- Christos Papachristos, Autonomous Robots Lab, University of Nevada, Reno

- Thomas Stastny, Autonomous Systems Lab, ETH Zurich
